Can Large Language Models Help Us Combat Online Misinformation?

We are happy to announce that our department's first article has been published in a Frontiers journal!

The institute announces the publication of its first article, a study on automated fact-checking and the role of large language models (LLMs) such as GPT-4 in combating misinformation, authored by Dorian Quelle and Prof. Alexandre Bovet.

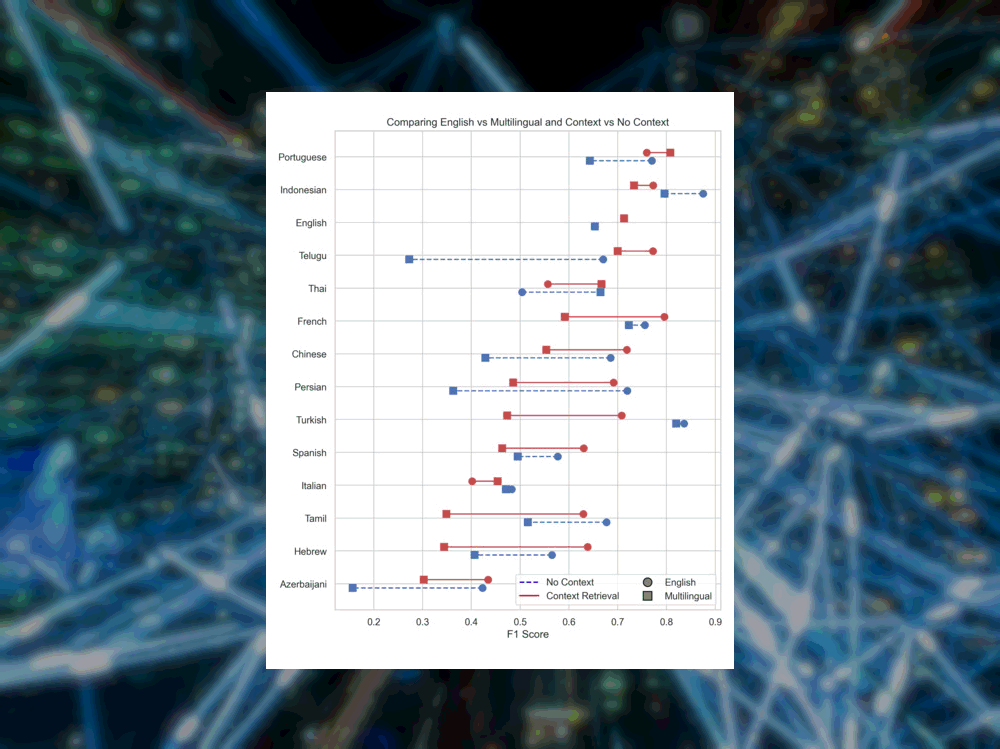

As the quantity of misinformation online exceeds the capacity of fact-checkers, LLMs may hold the promise of verifying content automatically. The study investigates the performance of LLMs in fact-checking tasks, highlighting their ability to retrieve contextual information to assess the veracity of a statement and even to substantiate their findings. At the same time, the study calls for caution: while GPT-4 is superior to GPT-3, accuracy varies substantially depending on the nature of the queries and the truthfulness of the claims. The findings emphasise the potential of LLMs in fact-checking and advocate continued research to fully understand their capabilities and limitations.

The institute would like to congratulate Dorian Quelle and Prof. Alexandre Bovet on their publication.

Link to the article: The perils and promises of fact-checking with large language models (Frontiers, frontiersin.org)